AI models working together like a team? It might sound far-fetched, but that’s exactly what a new research development is promising. I just ran across news that Tokyo-based startup Sakana AI (fresh off a $30M seed funding round 1) has unveiled an approach that allows multiple AI models to cooperate in real time on the same task. That sounds pretty sick.

In essence, instead of one giant Artificial Intelligence (AI) system handling a problem, you have several Large Language Models (LLMs) teaming up – each contributing its strengths to crack the challenge collectively. The method, inspired by how nature’s swarms solve problems, is built on a novel algorithm called Adaptive Branching Monte Carlo Tree Search (AB-MCTS) 2.

Think of it as the 1992 United States Men’s Olympic Basketball team, aka the “Dream Team,” but made of AI agents: the algorithm enables trial-and-error collaboration among the models, so they can bounce ideas off each other (so to speak) and combine their unique skills to solve problems that stump any single model.

LLM Swarm Intelligence in Action

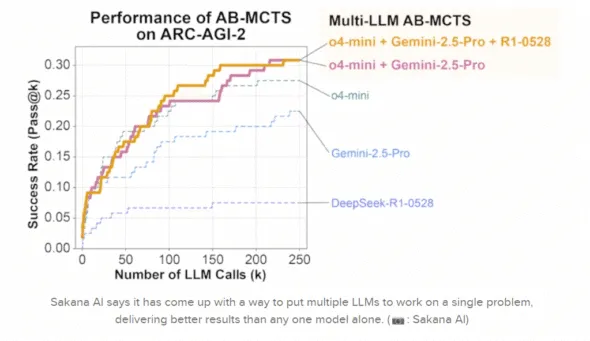

Rather than merging multiple models into one, Sakana’s technique keeps each model separate and lets them coordinate their efforts – mimicking swarm intelligence (much like a school of fish or a hive of bees) 3. The company’s evolutionary computing roots show here: Sakana’s very name means “fish” in Japanese, and its logo features a school of fish banding together to form a larger shape 4. This nature-inspired philosophy guided their earlier experiments in “evolutionary model merging,” and now it’s about getting models to collaborate on the fly at inference time.

The early results are impressive. In tests, a trio of cutting-edge models (one each from OpenAI, Google, and DeepSeek) tackled a notoriously difficult reasoning benchmark (ARC-AGI-2) using AB-MCTS – and the collective outperformed any of the individual models by a wide margin 5, 3. In fact, Sakana reports that this “LLM teamwork” achieved results no single model could: the multi-model ensemble solved over 30% of the test tasks, significantly better than any model did alone 3. Notably, this was done without any retraining or fine-tuning of the models – the coordination itself provided the boost, leveraging each model’s diversity as a feature, not a bug 3.

Figure: Performance on the ARC-AGI-2 reasoning benchmark when models work together. Sakana AI’s multi-LLM AB-MCTS approach (orange line) quickly achieves a higher success rate (Pass@k) than any single model alone (blue-ish and violet-ish lines) 3. The chart, one of several from Sakana’s blog, vividly shows how the swarm of models outpaces individual efforts – a case where many heads are indeed better than one.
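If Pass@k is new to you: in the simple sense used here, a task counts as solved when at least one of the first k attempts is correct. Here is a minimal Python sketch of that bookkeeping. The attempt results are made up for illustration and are not Sakana’s data, and `pass_at_k` is a hypothetical helper name.

```python
def pass_at_k(attempts_per_task, k):
    """Fraction of tasks with at least one correct answer in the first k attempts."""
    if not attempts_per_task:
        return 0.0
    solved = sum(1 for attempts in attempts_per_task if any(attempts[:k]))
    return solved / len(attempts_per_task)

# Hypothetical results: each inner list holds True/False per attempt on one task.
results = [
    [False, True, False],   # solved on the 2nd attempt
    [False, False, False],  # never solved
    [True, False, False],   # solved on the 1st attempt
    [False, False, True],   # solved on the 3rd attempt
]

for k in (1, 2, 3):
    print(f"Pass@{k} = {pass_at_k(results, k):.2f}")
```

With these invented results, the score climbs from 0.25 to 0.50 to 0.75 as k grows, which is why the curves in charts like this rise with more attempts.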

It’s worth emphasizing how novel this is: the AI models are collaborating at inference time (when you ask them a question), not just during training. Typically, if you wanted the strength of multiple models, you might ensemble their outputs after the fact or train a bigger model combining them. Here, the models remain separate but engage in a coordinated problem-solving process in real time. They effectively “converse” through the AB-MCTS algorithm – trying different approaches, refining partial solutions, and even handing off subtasks to whichever model is best suited 6. (As a fun aside, the Sakana team’s blog is full of great diagrams of this process, and I have to say, their visuals and cover photos make the technical details surprisingly digestible and pretty cool to look at!)
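To make the “try a new approach vs. refine an existing one” idea concrete, here is a deliberately flattened Python sketch. It is not Sakana’s implementation and it builds no search tree. It only shows the two choices the text describes (start fresh or refine, and which model to ask) being made adaptively. Thompson sampling over Beta posteriors is used as one reasonable way to make those choices; treat that, the toy scoring function, and the three stub “models” as assumptions of this sketch.

```python
import random

TARGET = 0.73  # hidden optimum of the toy task (hypothetical)

def score(x):
    """Toy scorer in [0, 1]: closer to TARGET is better."""
    return max(0.0, 1.0 - abs(x - TARGET))

# Stub "models" with different biases; real LLM calls would go here.
MODELS = {
    "model_a": {"center": 0.2, "step": 0.05},
    "model_b": {"center": 0.6, "step": 0.20},
    "model_c": {"center": 0.9, "step": 0.10},
}

def go_wider(model, rng):
    """Generate a brand-new candidate from scratch."""
    x = rng.gauss(MODELS[model]["center"], 0.15)
    return min(1.0, max(0.0, x))

def go_deeper(model, parent, rng):
    """Refine an existing candidate with a small perturbation."""
    x = parent + rng.uniform(-1, 1) * MODELS[model]["step"]
    return min(1.0, max(0.0, x))

def search(budget=60, seed=0):
    rng = random.Random(seed)
    # One Beta(alpha, beta) posterior per (model, action) arm.
    arms = {(m, a): [1.0, 1.0] for m in MODELS for a in ("wider", "deeper")}
    candidates = []  # every answer tried so far
    for _ in range(budget):
        # Thompson sampling: draw from each posterior, act on the best draw.
        model, action = max(arms, key=lambda arm: rng.betavariate(*arms[arm]))
        if not candidates:
            action = "wider"  # nothing to refine yet
        if action == "deeper":
            parent = max(candidates, key=score)
            child = go_deeper(model, parent, rng)
        else:
            child = go_wider(model, rng)
        candidates.append(child)
        # Fractional reward updates the arm that was actually used.
        reward = score(child)
        arms[(model, action)][0] += reward
        arms[(model, action)][1] += 1.0 - reward
    return max(candidates, key=score), arms

best, arms = search()
print(f"best candidate: {best:.3f} (score {score(best):.3f})")
```

Arms that keep producing good candidates get sampled more often, so the budget drifts toward whichever model and strategy is working on this particular problem.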

From Transformer Revolution to AI Teamwork

One reason this development has the AI world buzzing is who’s behind it. Sakana AI’s founding team includes Llion Jones, a former Google researcher who was a co-author of the landmark 2017 paper “Attention Is All You Need” 7. That paper introduced the Transformer architecture – the very backbone of Generative AI (Gen AI) as we know it today (it’s the technology that powers GPT-4, ChatGPT, and virtually all modern LLMs). In other words, one of the folks who helped ignite the current AI revolution is now helping chart its next evolution. Jones (now CTO at Sakana) and co-founder David Ha left Google to pursue a fresh idea: instead of endlessly scaling up one monolithic model, why not make many models work together? In fact, Sakana told Reuters that their goal is to have “a large number of smaller models communicate and work together rather than creating one giant model”.

Supercharge Your Workflow with Generative AI

Curious how these AI innovations could streamline your business? Schedule a free “Using Generative AI to Optimize Your Processes” presentation with Killer Logic. We’ll discuss your goals and identify real opportunities to apply AI for efficiency and impact – offering candid, practical insights (no sales gimmicks). Whether we implement it, you tackle it in-house, or you take our ideas elsewhere, you’ll come away with actionable next steps. Let’s explore how a smarter, leaner approach to tech can deliver something killer for you.

This philosophy harkens back to a simple truth: two heads are often better than one. As Sakana’s researchers put it, humanity’s greatest achievements – the Apollo program, the Internet, the Human Genome Project – came from collaboration and collective intelligence, not lone geniuses 8. They argue the same should hold for AI. Different models have different “biases” and specialties based on how they were trained. One might be great at coding, another at creative writing, another at logic. Rather than view those differences as limitations, Sakana sees them as “precious resources for creating collective intelligence”. By pooling multiple AIs, each bringing its own strengths, we can solve problems that no single model – no matter how advanced – could solve alone 3 6.

Importantly for businesses, this approach could also mean freedom from being locked into a single AI vendor or model. Organizations can dynamically leverage the best aspects of different models, assigning the right AI to the right part of a task – say, using a specialized open-source model for data extraction and a top-tier proprietary model for reasoning – to get superior results 6. It’s a modular strategy: use the ideal tool for each job, then let them coordinate. (This modular ethos aligns with our own consultative approach at Killer Logic – always choosing the right tech for each requirement rather than one-size-fits-all solutions; see blog.killerlogic.io.) The fact that tech leaders like Llion Jones are championing this multi-LLM idea lends it credibility: one of the Transformer’s own inventors is now experimenting beyond the single-transformer paradigm. It feels like coming full circle – from enabling generative AI through Transformers to now potentially enabling collective AI through teamwork.

Why Collective AI Matters for Your Business

Okay, this is fascinating tech, but why should you care? For one, a collective AI approach could lead to more robust and reliable solutions in real-world use. Because multiple models cross-validate and build on each other’s outputs, the overall system can catch mistakes and fill knowledge gaps in ways a single model might miss. For example, the Sakana team observed instances where one model’s flawed answer was fixed by the others in the ensemble, leading to a correct solution that none of them could produce independently 6. In another case, they noted that hallucination (an AI making factual errors or “inventing” information) varies between models – so if you combine a highly logical but occasionally ungrounded model with another model that’s very factual, you can get the best of both worlds: strong reasoning and accurate, trustworthy outputs. In enterprise settings where AI accuracy is paramount (nobody wants a rogue AI confidently fabricating data in a report!), this team-of-AIs approach could be a game changer. The ability to mitigate hallucinations by having models double-check each other’s answers is a huge advantage.

From a development standpoint, leveraging multiple pre-existing models together also means you don’t have to train a huge new model from scratch to get state-of-the-art performance. That could translate to faster development cycles and lower costs. As one article put it, for businesses and researchers this approach could mean faster iteration, more resilient output, and dramatically better performance on complex tasks requiring reasoning, memory, or multi-step logic 3. Instead of waiting on one model to be perfect at everything, you can compose a solution where each AI handles what it’s best at. This modular strategy also avoids integration overhead: models can be orchestrated in parallel or in sequence at inference time without combining them into one giant system 3. And since models continually evolve, you could swap in a better model for a given component whenever it becomes available, without overhauling your whole AI pipeline. In short, it’s a very agile and future-proof way to build AI into your business processes.
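Here is a rough Python sketch of what “orchestrate in parallel or in sequence, and swap a component later” can look like in code. Everything in it is hypothetical: the model functions are stubs standing in for vendor SDK calls or local models, and the registry layout is just one simple way to keep components replaceable.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A "model" here is just a function from prompt to text (an assumption of
# this sketch; a real setup would wrap an API client or a local model).
Model = Callable[[str], str]

def extractor_v1(prompt: str) -> str:
    return f"[extractor_v1] fields from: {prompt}"

def extractor_v2(prompt: str) -> str:
    return f"[extractor_v2] fields from: {prompt}"

def reasoner(prompt: str) -> str:
    return f"[reasoner] conclusion about: {prompt}"

registry: Dict[str, Model] = {"extract": extractor_v1, "reason": reasoner}

def run_parallel(prompt: str, roles: Dict[str, Model]) -> Dict[str, str]:
    """Call every registered model on the same prompt concurrently."""
    with ThreadPoolExecutor(max_workers=len(roles)) as pool:
        futures = {role: pool.submit(model, prompt) for role, model in roles.items()}
        return {role: fut.result() for role, fut in futures.items()}

def run_sequence(prompt: str, roles: Dict[str, Model]) -> str:
    """Feed the extractor's output into the reasoner."""
    return roles["reason"](roles["extract"](prompt))

print(run_parallel("Q3 invoice", registry))
registry["extract"] = extractor_v2  # swap one component; nothing else changes
print(run_sequence("Q3 invoice", registry))
```

The swap is a single assignment because the rest of the code only depends on the role name, not on which model sits behind it.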

Let’s put this into perspective with a couple of industry examples:

Example: Oil & Gas – Smarter Drilling Decisions

Imagine an oil and gas company planning its next drilling operation. This is a complex puzzle: you need to analyze geological survey data to pick a site, monitor real-time sensor readings from equipment to ensure safety and efficiency, factor in market trends for oil prices, and adhere to strict environmental and safety regulations. Traditionally, a single AI might struggle to be an expert in all those domains at once. But a collective AI approach could deploy multiple specialized models working as a team. For instance, one LLM (or even a non-LLM model) could specialize in geology and interpret seismic data to suggest promising drilling spots. A second model could focus on operations, analyzing sensor data from rigs and predicting equipment maintenance needs (preventing downtime or accidents). A third could be tuned for financial analysis, forecasting oil demand and prices to time the operations optimally.

Using a coordination algorithm like AB-MCTS, these models could collaboratively iterate on a drilling plan: the geology expert model proposes a location, the operations model checks it against equipment status and safety profiles, the finance model projects the ROI, and they refine the plan together through several “trial-and-error” cycles.
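As a toy illustration of that propose, check, refine cycle, here is a small Python sketch. It is a plain propose-and-veto loop, far simpler than AB-MCTS, and every site name, number, and threshold in it is invented for the example. The three functions stand in for the geology, operations, and finance models.

```python
# Hypothetical site data; every number below is invented for illustration.
SITES = {
    "north_ridge": {"geology": 0.9, "risk": 0.7, "roi": 0.30},
    "east_basin":  {"geology": 0.8, "risk": 0.2, "roi": 0.05},
    "south_flat":  {"geology": 0.6, "risk": 0.1, "roi": 0.22},
}

def geology_model(rejected):
    """Propose the most promising site not yet rejected, or None."""
    open_sites = [s for s in SITES if s not in rejected]
    return max(open_sites, key=lambda s: SITES[s]["geology"], default=None)

def operations_model(site, max_risk=0.5):
    """Approve only if equipment and safety risk is acceptable."""
    return SITES[site]["risk"] <= max_risk

def finance_model(site, min_roi=0.10):
    """Approve only if the projected return clears the hurdle rate."""
    return SITES[site]["roi"] >= min_roi

def plan_drilling():
    rejected, log = set(), []
    while True:
        site = geology_model(rejected)
        if site is None:
            return None, log  # every site was vetoed
        if not operations_model(site):
            log.append((site, "rejected: operational risk"))
        elif not finance_model(site):
            log.append((site, "rejected: ROI too low"))
        else:
            log.append((site, "accepted"))
            return site, log
        rejected.add(site)  # feedback for the next proposal

site, log = plan_drilling()
for name, verdict in log:
    print(f"{name}: {verdict}")
```

With these made-up numbers, the geologically best site fails the safety check, the runner-up fails on ROI, and the third site is accepted, which is the kind of trade-off the three models would be negotiating.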

The end result would be a drilling strategy that balances geological potential, operational safety, and profitability – a solution no single model (or human engineer) could have derived as quickly or thoroughly. In effect, you’ve got an AI advisory board for your drilling decision. This could lead to safer operations (because the plan was vetted from multiple angles), lower costs (thanks to predictive maintenance scheduling and optimal timing), and higher yields (by targeting the right site at the right time). In an industry where decisions involve high stakes and massive data, an AI swarm that simplifies complexity and provides clear, data-driven recommendations would be incredibly valuable. (Not to mention, it aligns perfectly with the idea of automating the repetitive analysis – or as we like to say, “Automate the Stupid Sh!t” – so human experts can focus on strategy.)

Example: Real Estate – Personalized Client Service at Scale

Now consider a real estate scenario. A brokerage or busy realtor has to juggle market analysis, client preferences, property details, and legal paperwork – all while providing a personal touch to clients. Here’s how a multi-LLM ensemble could help: One AI model could specialize in market data, crunching numbers on recent sales, neighborhood trends, and pricing forecasts. Another model (say a generative language model) might excel at communication, crafting tailored property descriptions or friendly email updates to clients. A third model could be versed in legal/contracts, able to parse through lengthy real estate contracts or local regulations to flag important details.

Working together, these AIs can deliver a comprehensive service. For example, suppose a client is looking for a new home. The market model could identify listings that match the client’s budget and criteria and predict which neighborhoods are rising in value. The language model could take those insights and generate a polished, highly personalized report for the client – “Here are three homes that check your boxes, and one has a price drop expected soon due to market trends.” Meanwhile, before the realtor sends an offer, the legal-focused model could review the contract and disclosure documents, ensuring there are no unusual clauses or missing disclosures, giving both the agent and client peace of mind.

The collective result is an AI-augmented workflow where the client gets data-driven advice, custom-tailored communication, and thorough due diligence – all faster than a single generalist AI (or a small human team) could manage on its own. This kind of AI teamwork could let realtors handle more clients with better service: mundane tasks (data analysis, document review) are automated by specialized AIs, freeing the humans to do what they do best – understanding client needs and building relationships. In an industry as personal as real estate, augmenting each step of the process with just the right AI specialist can translate to happier clients and more deals closed.

The Road Ahead: AI Teams, Not Just AI Tools

The idea of AIs collaborating like a human team is still new, and naturally, it will require more validation beyond initial lab results. There’s healthy skepticism in the AI community: will a swarm of smaller models really outshine the single big models in the long run? The early benchmarks are promising, but we’ll need to see independent evaluations and real-world deployments to truly prove the concept 3. It’s one thing to solve a curated puzzle like ARC-AGI-2; it’s another to tackle messy, unpredictable business problems. That said, this “collective intelligence” approach has opened a door to what might be a new paradigm in AI development 3. Instead of just racing to build a bigger model with more parameters, we might achieve more by orchestrating ensembles of smart, specialized agents working together 3. It’s a bit like the microservices idea in software architecture, applied to AI – many components doing one thing well, communicating to handle complexity.

For us at Killer Logic (and for tech leaders in any industry), this shift is exciting. It reinforces a principle we hold dear: smarter and leaner often beats brute force. The notion of “AI teams” also aligns with how we approach solutions in general – pick the right tools, integrate them thoughtfully, and you can move faster and get better results than by trying to force one mega-solution to do it all. We’ll be keeping a close eye on how multi-LLM techniques like AB-MCTS evolve, and we’re already brainstorming ways such AI collaborations could slot into automation solutions for our clients. After all, our mission is to help businesses simplify complexity with speed and clarity (blog.killerlogic.io) – and what’s more complex than the frontier of AI? If teaming up AIs can cut through that complexity, it’s something worth exploring.

In the meantime, whether you’re in oil & gas, real estate, or any field facing tough problems, it might be time to start thinking of AI not just as a tool, but as a team member (or rather, a team of team members!). The future of AI could very well be less about a single genius algorithm and more about an ensemble of cooperating minds. And just like a Dream Team, it’s all about how well those players work together.
